Modern NVIDIA GPU Transcoding Comparison

There is nothing quite like new hardware. I'm always looking for ways to speed up my daily video processing, so GPUs are always top of mind. Unfortunately, I've never seen the kind of numbers from NVIDIA that would tell me whether it's worth buying the latest and greatest of their data-center-class cards for encoding, decoding, transcoding, and so on. When the marketing material comes out for a new card like the fairly new NVIDIA L4, you are left wondering how it stacks up against your particular challenge. I don't have eight L4s or a need to handle 1,040 simultaneous 720p30 streams. What I do have is a dozen 4K streams that I need reduced to 1080p for longer-term storage. I'd like to know how well a new L4, or even an A2, works for my purposes compared to older cards like my GTX 1660 Ti or ancient GTX 1080.

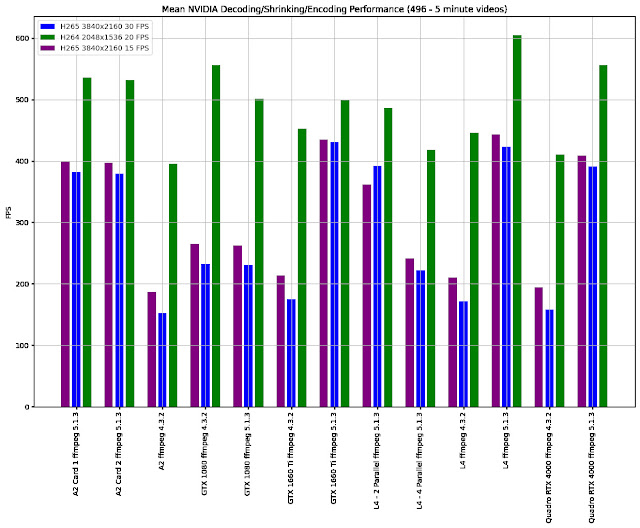

Having dumped the money into those cards, I was surprised to see that their performance was not all that different from my oldest card, a Pascal-generation GTX 1080. At this point it was unclear whether that was because the GTX 1080 has dual encoder chips or because I was still running FFMPEG 4.3.2. I finally decided to pull and build the latest version of FFMPEG, which was 5.1.2 at the time. After rerunning all of the tests, it became clear that what I once thought was my "flagship" encoding card was actually pretty outdated with the new software: all of my newer cards performed much better. The following graphic illustrates those numbers.

Note: For all of the data below, the FFMPEG command is roughly:

ffmpeg -vsync vfr -hwaccel cuda -hwaccel_output_format cuda -hwaccel_device 0 -i in.mp4 -vf "scale_npp=iw/2:ih/2" -vcodec hevc_nvenc -b:v 2M -r 15 out.mp4
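The command halves each dimension with `scale_npp=iw/2:ih/2` and targets a 2 Mb/s video bitrate, which is what makes it attractive for long-term storage. The small sketch below works out what that means in practice. The helper names are hypothetical, it treats `-b:v 2M` as exactly 2,000,000 bit/s on average (NVENC rate control only approximates the target), it ignores audio and container overhead, and 2592x1944 is just an example of a 5 MP resolution, not necessarily the one in my dataset.

```python
# Rough numbers implied by the ffmpeg command above (hypothetical helpers).
# Assumptions: -b:v 2M is treated as exactly 2,000,000 bit/s on average,
# only the video stream is counted (no audio or container overhead), and
# 2592x1944 is just an example of a 5 MP resolution.


def scaled_dims(width: int, height: int) -> tuple:
    """Mirror scale_npp=iw/2:ih/2 by halving each dimension (exact for even sizes)."""
    return width // 2, height // 2


def estimated_video_bytes(bitrate_bps: int, duration_s: float) -> float:
    """Average bitrate (bit/s) times duration (s), divided by 8 bits per byte."""
    return bitrate_bps * duration_s / 8


if __name__ == "__main__":
    print(scaled_dims(3840, 2160))  # (1920, 1080)
    print(scaled_dims(2592, 1944))  # (1296, 972)
    per_file = estimated_video_bytes(2_000_000, 5 * 60)
    print(f"{per_file / 1e6:.0f} MB per 5-minute file")  # 75 MB per 5-minute file
    print(f"{496 * per_file / 1e9:.1f} GB for 496 files")  # 37.2 GB for 496 files
```

So a 4K UHD source comes out at 1080p, and at the target bitrate each 5-minute file lands somewhere around 75 MB.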

The workload probably deserves a little explanation. The dataset consists of 496 video files, each 5 minutes long, broken down approximately as follows:

- 100 4K HEVC videos at 30 FPS
- 300 4K HEVC videos at 15 FPS
- 100 5 MP H.264 videos at 20 FPS
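To put the workload in perspective, here is a rough frame count from the approximate breakdown above, with 300 seconds per file. The counts sum to 500 rather than 496, so treat this as an estimate. Every input frame has to be decoded, but because of `-r 15` the encoder only has to produce 15 frames per second of output:

```latex
\begin{aligned}
F_{\text{decoded}} &\approx 100 \cdot 300 \cdot 30 + 300 \cdot 300 \cdot 15 + 100 \cdot 300 \cdot 20 \\
&= 900{,}000 + 1{,}350{,}000 + 600{,}000 = 2{,}850{,}000 \\
F_{\text{encoded}} &\approx (100 + 300 + 100) \cdot 300 \cdot 15 = 2{,}250{,}000
\end{aligned}
```

The 30 FPS files therefore put twice as much load on the decoders as on the encoders, while the 15 FPS files load both equally.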

Beyond the gains on the Turing, Ampere, and Ada Lovelace generation cards, it's noteworthy that performance barely changed for the Pascal-generation GTX 1080 that I once considered my "flagship" card.

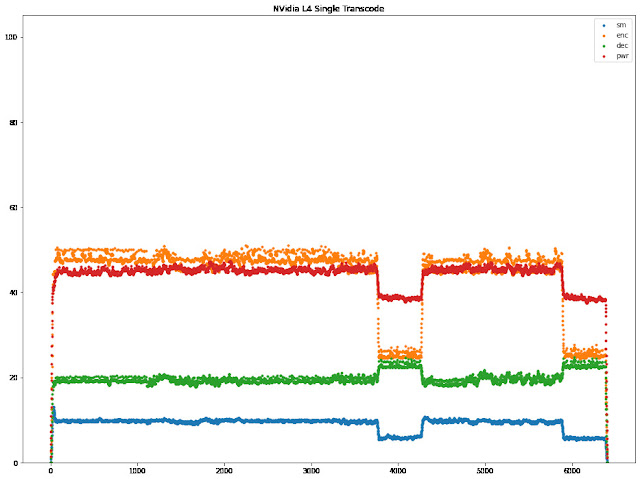
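Because these results depend so heavily on the FFMPEG version, it is worth confirming which build is actually on the PATH and that it was compiled with NVENC support. The sketch below parses the first line of `ffmpeg -version` and the list printed by `ffmpeg -hide_banner -encoders`; the function names are hypothetical, and it assumes the usual text layout of those two commands. Note that an encoder appearing in the list only means it was compiled in, not that the installed driver and GPU support it.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Matches release builds such as "ffmpeg version 5.1.2" or "ffmpeg version n4.3.2".
VERSION_RE = re.compile(r"^ffmpeg version n?(\d+)\.(\d+)(?:\.(\d+))?")


def parse_ffmpeg_version(text: str) -> Optional[Tuple[int, int, int]]:
    """Return (major, minor, patch) from `ffmpeg -version` output, or None."""
    first = text.splitlines()[0] if text else ""
    m = VERSION_RE.match(first)
    if not m:
        return None  # e.g. git snapshot builds report "N-<number>-g<hash>"
    major, minor, patch = m.groups()
    return int(major), int(minor), int(patch or 0)


def has_encoder(encoders_text: str, name: str) -> bool:
    """Check `ffmpeg -hide_banner -encoders` output for an encoder name.

    The output is a legend, a "------" separator, then one line per encoder
    in the form "<flags> <name> <description>".
    """
    seen_separator = False
    for line in encoders_text.splitlines():
        if not seen_separator:
            seen_separator = line.strip().startswith("------")
            continue
        parts = line.split()
        if len(parts) >= 2 and parts[1] == name:
            return True
    return False


if __name__ == "__main__":
    if shutil.which("ffmpeg") is None:
        print("ffmpeg not found on PATH")
    else:
        ver = subprocess.run(["ffmpeg", "-version"], capture_output=True, text=True).stdout
        enc = subprocess.run(["ffmpeg", "-hide_banner", "-encoders"],
                             capture_output=True, text=True).stdout
        print("version:", parse_ffmpeg_version(ver))
        print("hevc_nvenc compiled in:", has_encoder(enc, "hevc_nvenc"))
```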

Getting the most with the new version of FFMPEG

Since the L4 has 2 encoders and 4 decoders, I found it necessary to run more than one transcode at a time to get the most performance out of it. Two simultaneous transcodes seemed like they would be optimal, but when processing H264 video the card's utilization dropped, because nvenc/nvdec appear to have headroom to spare on H264, whereas HEVC saturates the nvenc/nvdec chips. The chart below shows the stats while processing the dataset described above with a single transcode at a time. All utilization stats come from "nvidia-smi dmon -d 1".

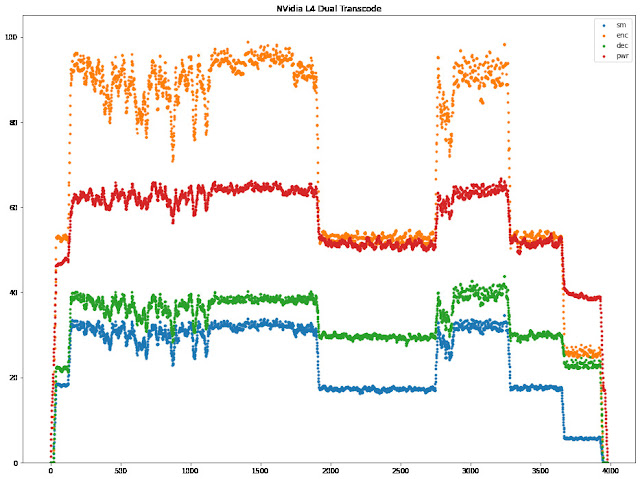
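A `nvidia-smi dmon -d 1` log (for example, one captured with `nvidia-smi dmon -d 1 > dmon.log` while a batch runs) can be reduced to average encoder and decoder utilization with a few lines of parsing. This is a sketch, not the tooling behind the charts: `parse_dmon` is a hypothetical name, it assumes a single GPU and dmon's default text layout, where the first `#` line names the columns (newer drivers may add columns such as `jpg` and `ofa`, which this handles by name), and the sample rows are made up for illustration rather than measured.

```python
from statistics import mean
from typing import Dict, List


def parse_dmon(text: str) -> Dict[str, List[float]]:
    """Collect numeric samples per column from `nvidia-smi dmon` text output.

    The first '#' line is treated as the column-name header. Later '#' lines
    (the units row and any repeated headers) are skipped, and cells that are
    not numbers, such as '-', are ignored.
    """
    columns: List[str] = []
    samples: Dict[str, List[float]] = {}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if stripped.startswith("#"):
            if not columns:
                columns = stripped.lstrip("#").split()
                samples = {name: [] for name in columns}
            continue
        for name, cell in zip(columns, stripped.split()):
            try:
                samples[name].append(float(cell))
            except ValueError:
                pass  # '-' marks a metric that is not reported


    return samples


# Illustrative rows in dmon's default layout; NOT measured data.
SAMPLE = """\
# gpu    pwr  gtemp  mtemp     sm    mem    enc    dec   mclk   pclk
# Idx      W      C      C      %      %      %      %    MHz    MHz
    0     72     45      -     18      9     90     40   6250   2040
    0     70     45      -     17      9     80     30   6250   2040
"""

if __name__ == "__main__":
    stats = parse_dmon(SAMPLE)
    print(mean(stats["enc"]), mean(stats["dec"]))  # 85.0 35.0
```

Looking up columns by header name rather than position keeps the parser working across driver versions that add or reorder columns.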

The drops around 4,000 and 6,000 seconds correspond to the H264 portions of the dataset mentioned earlier. With two simultaneous transcodes, the processing time is reduced, but not by half.

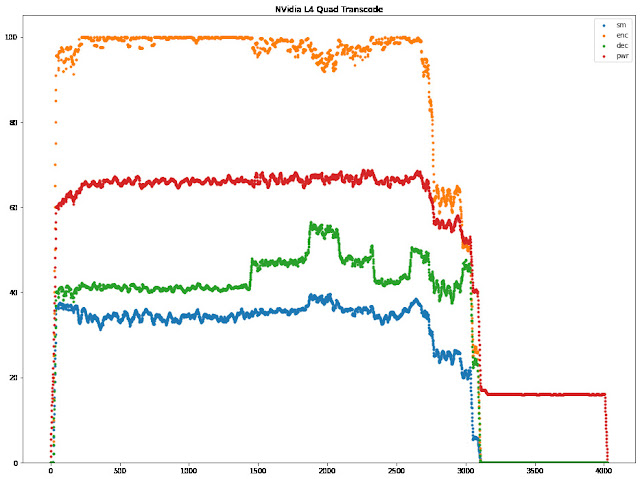

Finally, I ran a test with 4 simultaneous transcodes and found that the total processing time dropped to about half of the original.

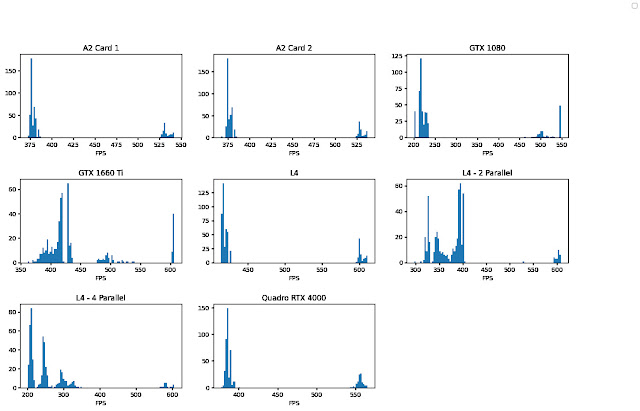
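Running several transcodes at once doesn't require anything fancy. The sketch below caps the number of concurrent ffmpeg processes with a thread pool and times the whole batch. The function names are hypothetical, and the argument list is modeled on the command earlier in this post with a few assumptions of my own: `-hide_banner` and `-y` are there for unattended runs, and `-hwaccel cuda -hwaccel_output_format cuda` are included so that `scale_npp` receives frames already in GPU memory. With `dry_run=True` it only prints the commands.

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import List


def build_cmd(src: Path, dst: Path, gpu: int = 0) -> List[str]:
    """Hypothetical argument list modeled on the command in this post."""
    return [
        "ffmpeg", "-hide_banner", "-y",  # quiet banner, overwrite existing outputs
        "-vsync", "vfr",
        "-hwaccel", "cuda", "-hwaccel_output_format", "cuda",  # keep frames on the GPU
        "-hwaccel_device", str(gpu),
        "-i", str(src),
        "-vf", "scale_npp=iw/2:ih/2",  # halve width and height on the GPU
        "-vcodec", "hevc_nvenc", "-b:v", "2M", "-r", "15",
        str(dst),
    ]


def run_batch(files: List[Path], out_dir: Path, jobs: int, dry_run: bool = False) -> float:
    """Transcode `files` with at most `jobs` ffmpeg processes at once.

    Returns the wall-clock seconds for the whole batch.
    """
    if not dry_run:
        out_dir.mkdir(parents=True, exist_ok=True)
    cmds = [build_cmd(f, out_dir / f.name) for f in files]

    def run(cmd: List[str]) -> int:
        if dry_run:
            print(" ".join(cmd))
            return 0
        return subprocess.run(cmd, stdout=subprocess.DEVNULL,
                              stderr=subprocess.DEVNULL).returncode

    start = time.monotonic()
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        codes = list(pool.map(run, cmds))
    failed = sum(1 for code in codes if code != 0)
    if failed:
        print(f"{failed} transcode(s) failed", file=sys.stderr)
    return time.monotonic() - start


if __name__ == "__main__" and len(sys.argv) > 2:
    # Usage: python batch.py <jobs> <input files...>
    n_jobs = int(sys.argv[1])
    inputs = [Path(p) for p in sys.argv[2:]]
    elapsed = run_batch(inputs, Path("out"), jobs=n_jobs)
    print(f"{len(inputs)} files, {n_jobs} parallel jobs: {elapsed:.0f} s")
```

Threads are enough here because the heavy lifting happens in the ffmpeg child processes, not in Python. Timing the same file list with `jobs` set to 1, 2, and 4 gives the same kind of comparison as the runs above.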

Given the "optimal" processing above on the L4, I produced histograms of the FPS achieved by each of the cards mentioned earlier, as well as by the various runs on the L4.

With 2 and 4 parallel transcodes on the L4, there is clearly more variability and the average per-transcode FPS is lower, but the overall dataset processes faster because several files are being worked on at once.
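The apparent paradox of a lower average FPS but a faster batch comes down to what is being measured: per-transcode FPS is one job's frames divided by that job's own runtime, while batch throughput is all frames divided by the total wall-clock time. The sketch below uses hypothetical timings, chosen only to show the effect of overlapping two jobs; `Job` and `summarize` are illustrative names.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass
class Job:
    frames: int   # frames produced by one transcode
    start: float  # wall-clock start time, seconds
    end: float    # wall-clock end time, seconds

    @property
    def fps(self) -> float:
        """Per-transcode FPS: this job's frames over this job's own runtime."""
        return self.frames / (self.end - self.start)


def summarize(jobs: List[Job]) -> Tuple[float, float]:
    """Return (mean per-transcode FPS, aggregate FPS for the whole batch)."""
    wall = max(j.end for j in jobs) - min(j.start for j in jobs)
    return mean([j.fps for j in jobs]), sum(j.frames for j in jobs) / wall


# Hypothetical timings: two 4,500-frame files (5 minutes at 15 FPS output).
serial = [Job(4500, 0.0, 10.0), Job(4500, 10.0, 20.0)]   # one at a time
parallel = [Job(4500, 0.0, 15.0), Job(4500, 0.0, 15.0)]  # both at once, each slower

if __name__ == "__main__":
    print(summarize(serial))    # (450.0, 450.0)
    print(summarize(parallel))  # (300.0, 600.0)
```

Each parallel job runs slower (300 vs. 450 FPS), yet the pair finishes in 15 seconds instead of 20, which is the same pattern the L4 histograms show.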

Conclusion

1. With NVIDIA chipsets, it is wise to keep upgrading your software, as FFMPEG and the NVIDIA Video Codec SDK keep delivering performance improvements.

2. The optimal number of simultaneous transcode processes depends on the codec, so some trial and error is necessary.

3. Most nvenc/nvdec chips process at roughly the same rate, which is not what I had always expected. In the future I may try to dig deeper into this.

External Resources

You can always consult NVIDIA's Video Encode and Decode GPU Support Matrix to see how many encoder and decoder chips each card has: https://developer.nvidia.com/video-encode-and-decode-gpu-support-matrix-new
